In recent years, combined progress in computational capabilities and algorithms has enabled reinforcement learning to exceed expert-level human performance in tasks such as Atari and Go. Nonetheless, many reinforcement learning approaches thrive primarily in well-defined mission scenarios. It remains a challenge to learn in domains of high dimensionality (e.g., a robot equipped with many sensors), where tasks are durative, an agent receives sparse feedback, and sensory inputs found salient in one domain may be less relevant in another.

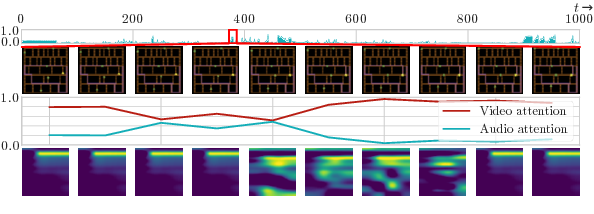

This work introduces the crossmodal learning paradigm and addresses the problem of learning in a high-dimensional domain with multiple sensory inputs. The main contribution is a hierarchical reinforcement learning approach in which the learning agent exploits hierarchies in the dimensions of time and sensor modalities. The approach enables an agent to learn to focus on important latent features while filtering out irrelevant sensory modalities during execution. The benefit is more efficient use of the agent’s limited computational and storage resources (e.g., its finite-sized memory) during learning and execution. This work was presented at the NIPS 2017 Deep Reinforcement Learning symposium and Hierarchical Reinforcement Learning workshop, and was accepted to the AAMAS 2018 conference.